As collaborative robots give way to autonomous ones, the future is not as frightening as you might think, says Professor Elizabeth Croft, presenter at the Australian Engineering Conference 2018.

When her daughter came home with a textbook that said robots are designed by ‘scientists’, Professor Elizabeth Croft was very surprised. Most of the driving force behind robot technology and capability is coming from engineers, she says.

“I had a bit of a fit when I saw what the textbook said. I told my daughter, ‘No, actually, engineering is pushing the forefronts of robotics. Science, art and design all contribute and help us to think about it, but the engineering part is what allows us to continue to innovate,’” said Croft, dean of the faculty of engineering at Monash University.

When Croft talks about the future of robotics, she’s not discussing the manned ‘collaborative’ machines that, for instance, help people on an assembly line to lift engine blocks into car bodies and that switch off when their operator is absent. She means fully autonomous robots. “Collaborative robots, or ‘cobots’, were passive in the sense that they would not act unless the operator put motive force into them,” she said. They were very safe because they were not autonomous. If the operator did not touch the cobot’s controls, it would stop.

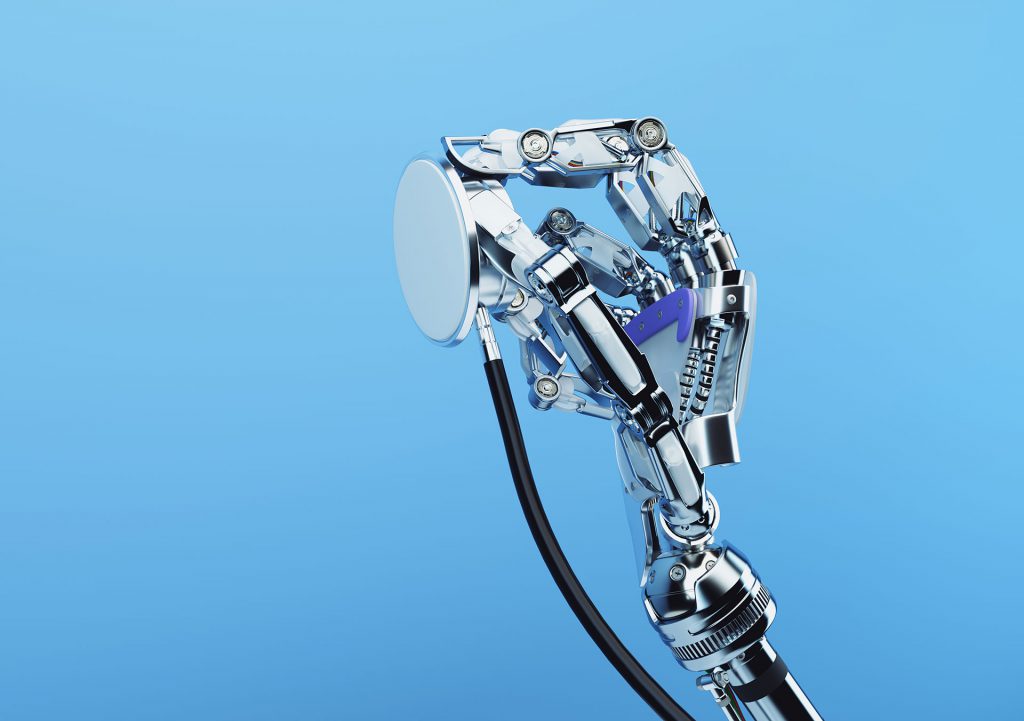

“Where we’ve moved is to a place where now we have autonomous robots that are independent agents, such as delivery robots, robots operating as assistants, etc.,” she said.

“This is the area that I focus on: robots that bring you something. Maybe they hand you a tool. Maybe they carry out parts of an operation that are common in a workplace. We’re interested in collaborating with those agents.”

These autonomous robots are different from cobots, Croft said, because they have their own agenda and their own intent. They are not tele-operated, and they are not activated or deactivated. They have their own jobs, just like people in the workplace. They need no permission to operate.

It’s in this area that Croft works, in the space where rules of engagement have to be figured out. Several major issues are slowing things down right now, such as questions around liability and safety frameworks. There is also the question of how the front end works: how do humans interact with the robot, and how do they tell it what they want it to do? If voice operation is key, then we’re clearly not there yet, judging by the voice interactions with our smartphones.

And what about social and ethical impacts of technology in society? These are powerful, autonomous systems that are being developed, so how and where should boundaries be drawn to ensure Skynet doesn’t send a cyborg assassin to kill Sarah Connor?

“The underlying programming and bounding of how much autonomy those systems have really impacts what consequences can happen,” Croft said.

“So, it is very important that students of this technology think about ethical frameworks in the context of programming frameworks. Ethics must underlie the basic design and concepts around how an autonomous system operates. That needs to be part of the fundamental coding, part of the training of an engineer.”

Reducing complication

In order to tone down the Terminator imagery, Croft offers an example of how an autonomous robot might change workflow for the better.

When you buy a piece of furniture from IKEA, the instructions contain a small picture of a man and look friendly, but they’re actually quite complicated. There are numerous pieces, many just a little bit different to each other. Some are very small, some are very large, some are flexible. The assembly requires dexterity and choices about what must be done in what order. Constant close inspection is a must because of the numerous dependencies.

“This job cannot be fully automated because it’s too expensive,” she said. “But there are parts of that operation where it would make a lot of sense to have more automation or assistance involved.”

Such technology is very close to reality right now, but we don’t have the legal and other frameworks to make it fully operational. “We’ve come to a place where people can grab onto a robot, move it around, show it an operation, then press a button and the robot does it,” Croft said.

“But because of legal issues, liability and occupational health and safety, there are risks that need to be managed. There are issues around getting the person and the robot to come together in a workspace in a safe way. Who’s responsible?

“When the operator is always in charge, then there’s no doubt. But when the operator has no longer got their hand on the big red button, then there is risk.”

Who assumes that risk? In Europe, Croft said, the risk is assumed mainly by the manufacturer of the robot, which creates a challenge for innovation. In North America, the risk is often assumed by the person or company that owns the robot. In other jurisdictions, the risk could be assumed by the worker who is using the robot.

Swapping robots with humans

Outside of the legal framework, the biggest issue is actually the workflow itself. On a typical production line, for instance, if one worker can’t do a job, another is brought in to take their place. People are quickly interchangeable. The same needs to be true of a robot being replaced by a human. If the robot breaks down, the business can’t stop operating. So, humans and robots must be easily swapped in and out. There also needs to be a clear understanding of the value being offered by the robot, to ensure the worker is comfortable working with the robot. And the worker must feel that the robot understands what they do, too.

“It will become a greater and greater requirement for educators of people working in software engineering or computer engineering to create a real understanding of the impacts – ethically, socially, environmentally – of the designs they create,” Croft said.

“We’ll need professionals interested in public policy and engineers with a strong ethical framework. The engineers are creating the future of technology. We are the ones who first see the potential impacts. If we don’t prepare our people for that, we’ll see unintended consequences of the technology.”

Source: Create Digital

Reproduced with permission from Engineers Australia’s CREATE magazine. All rights reserved.