Organizations have long struggled to balance security with the openness they need to run the business efficiently.

This balance was put to the test when organizations began relying on large workforces to modernize the business, again when they turned to offshore resources to reduce the cost of developing and maintaining the enterprise, and finally when they had to allow remote working in response to the global developments of the past two years.

Also, the past few years have not been kind to organizations, which must constantly evolve their business practices to be more agile and dynamic. Rather than allowing only a few groups of users to work “outside the perimeter”, organizations must now implement an open-access architecture because, in practice, the perimeter has vanished.

This is the slow change that a few analysts foresaw over a decade ago and that visionaries have been talking about for a long time now.

Introduction to Zero Trust (ZT)

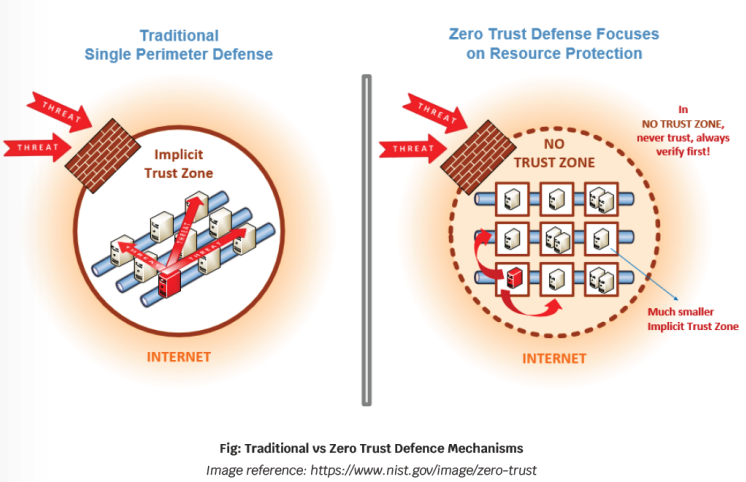

Zero Trust is a set of cybersecurity principles used to create a strategy that moves network defenses away from wide, static network perimeters and focuses them more narrowly on subjects, enterprise assets (i.e., devices, infrastructure components, applications, and virtual and cloud components), individuals, and other non-human entities that request access to resources.

These principles are designed to prevent data breaches and limit internal lateral movement, in case of an attack from an external threat actor or malicious insider.

Before we proceed further, there is a basic concept we must be aware of. The term Zero Trust means Zero “Implicit” Trust.

This is important for understanding what analysts and research teams imply in their discussions, and it is one of the issues that we will discuss later in this article. For now, let us have a look at the tenets of ZT and what they really mean for businesses:

- Principle 1 – All entities are untrusted by default

- Principle 2 – Least privilege access is enforced

- Principle 3 – Comprehensive security monitoring is implemented

According to NIST, the following principles are technical extensions of the basic principles mentioned above:

- All data sources and computing services are considered resources

- The enterprise ensures all owned systems are in their most secure state possible

- All communication is secured regardless of network location

- Access to individual enterprise resources is granted on a per-connection basis

- User authentication is dynamic and strictly enforced before access

- Access to resources is determined by policy, including the observable state of the user, system, and environment
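To make these principles a little more concrete, here is a minimal sketch in Python. It is only an illustration: the roles, the resource name, and the `POLICY` table are hypothetical and do not come from NIST or from any particular product. It shows a request being denied unless a policy explicitly grants it, evaluated afresh on every call, with every decision recorded for monitoring.

```python
from dataclasses import dataclass, field

# Hypothetical policy table: (role, resource) -> actions explicitly granted.
# Anything not listed here is denied (all entities are untrusted by default).
POLICY = {
    ("analyst", "sales-db"): {"read"},
    ("dba", "sales-db"): {"read", "write"},
}


@dataclass
class AccessLog:
    """Collects every decision so that it can be monitored later."""
    entries: list = field(default_factory=list)

    def record(self, role, resource, action, allowed):
        self.entries.append((role, resource, action, allowed))


def is_allowed(role, resource, action, log):
    """Evaluate a single request; nothing is remembered between calls."""
    granted = POLICY.get((role, resource), set())  # unknown pairs get no rights
    allowed = action in granted                    # least privilege: exact match only
    log.record(role, resource, action, allowed)    # denials are logged as well
    return allowed


log = AccessLog()
print(is_allowed("analyst", "sales-db", "read", log))   # True
print(is_allowed("analyst", "sales-db", "write", log))  # False
print(is_allowed("intern", "sales-db", "read", log))    # False
```

A real deployment would load such rules from a central policy store rather than a dictionary in code, but the shape of the decision stays the same.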

Now, these might not look very complicated, and it may seem that we have been doing them for a long time. Remember, however, that these policies must be implemented with a business use case as the driving force and with basic security parameters in mind: you would not spend $100 to protect a resource that is worth $50. Essentially, context matters, and we must carefully design the enterprise architecture so that we have complete information about resources, the users trying to interact with them, the activities performed in those interactions, and the policies implemented to block users who are not supposed to access a resource in the first place.

The evolution of Zero Trust, essentially, is the natural progression of security posture management for organizations. This is the agile way of handling security, as it focuses on users, assets, and resources rather than the perimeter, because traditional mechanisms were not dynamic enough for an organization to function effectively and securely. It has been a great pleasure to see that organizations have become more aware of the fallout from security breaches and thus actively discuss security requirements with business teams. Zero Trust Architecture (ZTA) has been in the making for a few decades now and will continue to evolve based on the requirements and risk appetite of organizations.

As previously mentioned, we must pay special attention to the “implicit” portion of Zero Trust. Simply put, it helps us define the portion of resources that can still grant access to users based on previously established trust. This, paired with the risk appetite of the organization, usually drives the use case requirements. Organizations must therefore perform a detailed risk analysis of resources to derive the inherent risks of the components that make up their enterprise applications. This will help them better allocate time and effort to continuous diagnostics and mitigation plans for attacks on resources.

Now, this brings us to a very important portion of the discussion. Continuous monitoring, PKI, IAM, SIEM, UEBA, network micro-segmentation, etc., are the usual terms that get thrown around, and yet, somehow, we are not able to make the impact we thought we would.

These have been around for a long time now, so what are we trying to achieve here? What is really missing from these discussions is the term context, which can help us drive the message home. With adequate context, organizations would be better able to decide whether a user is trying to access the application as a normal or as a privileged user, is executing commands and actions that follow their usual behavior, is permitted to execute those commands from the network or geolocation they are currently in, and has enough proof that they are who they say they are, with the organization having no evidence to believe otherwise.
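The questions above can be sketched as a small decision function. This is an illustrative sketch only: the field names, the three outcomes, and the rule that privileged or unusual requests trigger extra verification are assumptions made for the example, not a prescribed ZTA policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    privileged: bool          # is the user asking for privileged access?
    usual_behavior: bool      # do the actions match the user's usual pattern?
    location_permitted: bool  # is the network or geolocation allowed by policy?
    identity_proven: bool     # is there enough proof the user is who they claim to be?


def decide(ctx: RequestContext) -> str:
    """Return 'allow', 'step_up', or 'deny' for a single request."""
    # Without proof of identity, or from a location policy forbids,
    # no other signal can rescue the request.
    if not ctx.identity_proven or not ctx.location_permitted:
        return "deny"
    # Assumed policy choice: privileged or unusual requests are not refused
    # outright but require additional verification first.
    if ctx.privileged or not ctx.usual_behavior:
        return "step_up"
    return "allow"


routine = RequestContext(privileged=False, usual_behavior=True,
                         location_permitted=True, identity_proven=True)
admin = RequestContext(privileged=True, usual_behavior=True,
                       location_permitted=True, identity_proven=True)
abroad = RequestContext(privileged=False, usual_behavior=True,
                        location_permitted=False, identity_proven=True)

print(decide(routine))  # allow
print(decide(admin))    # step_up
print(decide(abroad))   # deny
```

In practice each of these booleans would be fed by a different system (IAM, UEBA, network controls), which is exactly why context ties the familiar acronyms together.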

The level of detail an organization should go into can be derived from the industry compliance requirements that bind it. With this additional context, the situation might now seem complicated.

There is no single way an organization must do things to get it right. Usually, it is just a matter of creating and deploying security policies that help organizations be a bit more proactive rather than reactive. Organizations have been on this path for a long time now and, unknowingly, the majority of them have already completed the heavy lifting. We should now delve a bit deeper to understand why ZTA is the best refuge for security teams in an era of constant change.

- Choice of security methodology: Organizations can pick and choose, from a variety of ZTA approaches, the one that suits them best and that can be implemented without any changes to the business flows (with obvious changes to the security flows). This matters because any major disruption to current business flows can cause more issues and lead to resistance from management teams, ultimately leading to the failure of projects. A full ZT solution will include elements of all three approaches, i.e., enhanced identity governance, logical micro-segmentation, and network-based segmentation. The decision about which approach best suits the organization is usually driven by the use cases the organization wants to cater to and by its existing business and security policies. This presents a great opportunity for organizations to adopt a framework with the least resistance from business teams.

- Excellent user experience without compromising security: Usually it is tough for organizations to balance security with the openness of resources, which causes friction whenever new security measures are introduced. However, the framework allows organizations to enhance user experience, for example through the introduction of passwordless logins, while simultaneously allowing security teams to be proactive in detecting a user’s network and geolocation, as well as their permissions to log in to resources with the desired rights on the respective resources.

- Customer and business data protection: With the definition of data as a resource ingrained in the principles of ZTA, data finally receives the attention it deserves. This helps organizations gain better knowledge of and control over the data being handled, allowing them to create a detailed map of the data, its types, its custodians, its retention policies, etc., and to tie all of this back to a user and their activities on it. This holistic approach of handling data along with identities has, again, been in the making for the past couple of years, giving rise to data access governance, which can complement audits of users’ access to other resources.

- Detect breaches rapidly by gaining greater visibility of enterprise traffic: This may seem like a regular activity for an enterprise, but the visibility that security teams needed into network traffic had been blurred by the implicit trust zones that existed in previous models. This usually made it difficult to understand whether a trusted user was malicious, whether service accounts created for applications were being used by a person, whether a user’s location and activities were consistent with past behavior, etc. Discussions of these parameters, along with the availability of logging from last-mile resources, are helping organizations gain a holistic view of resources spread across the enterprise and define detective and corrective actions for breaches.

Various firms have created solutions for ZTA methodologies, which can cater to the security requirements of hybrid infrastructures as well. The recent increase in the adoption of cloud services has also led to a host of solutions that can dramatically increase the reach of security teams without the costly deployments that used to take years. This has not only reduced the complexity of implementing security policies, but also eliminated security product fragmentation while reducing the number of trained resources needed to manage the complete infrastructure. ROI on these solution deployments has thus been generally positive (within months of deployment), allowing the business team to pay proper attention to the security team’s requirements.

Is it just another buzzword?

Zero Trust is not just another buzzword in a never-ending list of tech trends. When Zero Trust principles are implemented in any environment, they lead to minimal exposure to cyber-attacks, higher continuity of critical processes, increased and cost-effective compliance, and a ‘future-proof’ architecture that is agile enough to keep up with businesses’ requirements.

ZTA principles have allowed security teams to keep abreast of business requirements and act as a support mechanism for businesses rather than a deterrent. Less fragmentation in policy implementation, lower deployment costs, faster implementation, increased policy coverage, increased ROI, etc., are some of the pointers that are helping security teams drive the conversation, rather than living in constant fear of attacks and exposures.

All good things come with associated snake-oil sellers and their fake promises. For them, ZTA is a product, one that only they can make, that is the answer to all the “hacks”.

We should also be wary of the term “Pure ZTA” implementation, which can be twisted to suggest that there is only one right way to reach the destination of ZTA and that all other approaches are meaningless. The idea is to make you aware of firms that are disingenuous in their messaging and often use loosely coupled implementation approaches of ZTA to cause confusion. Even though the terminology and some of the concepts might feel new, organizations have been on the path to implementing ZTA without even knowing it. Examples include the implementation of Data Access Governance, UEBA, and context- and attribute-based authentication. These, again, have been a priority for many organizations for the past few years, and security teams have been gearing up for them.

This is the natural evolution of security policies for any organization, and the primary reason it has so many routes is that they all lead to the same idea: reducing the trust zone that a system or a person enjoys within the enterprise environment.

Technically, ZTA is just a control plane working on the data plane to create a very limited implicit trust zone. This would, thus, allow organizations to have a unified policy for Data Access, PKI, IAM, SIEM, etc., across all the resources, centrally created, deployed, and managed. These systems can be complemented with threat detection, remediation, and threat intelligence solutions, which can provide additional context to security teams and help them identify threats faster, minimize false positives, and reduce the time needed to respond to attacks.

Rohit Kumar CISSP, MSc – Cyber Laws and Cyber Security

Rohit has over 12 years of working experience with IAM and ZTA methodologies and is currently associated with EY as a solution architect for emerging technologies. He also has working experience in application virtualization, server virtualization, and DevSecOps, and has worked with customers in various domains like BFSI, education, Oil and Gas, healthcare, etc.

However, ZTA, just like other principles, does have some drawbacks that an organization must be aware of:

- Subversion of ZTA Decision Process: If a system owner removes a system before policies are enforced on it, the system will continue to work and operate outside the unified policies. This creates a blind spot for security teams, as it might not be possible for them to exercise direct control over such resources. Thus, it is always suggested to audit resources continually and record any configuration change made to them.

- Opaque Network flow: Many network communications do not allow deep packet inspection, which can hide an attacker’s communication with resources. The issue can be exacerbated if the devices being used are not owned and managed by internal IT teams, which could otherwise install agents that give them visibility into the network traffic. This can be mitigated by analyzing metadata related to network flows, which can provide the context needed to detect attacks on resources or any other malicious communications.

- System and Network information storage: Stored information about systems, networks, and resources is very important, as it is needed to run the enterprise seamlessly. However, these stores become the easiest target for attackers and thus must be protected as valuable enterprise data, with the associated access governance policies.

- Authentication issues: There are two aspects to the authentication issues that an enterprise can face, the first being stolen credentials and insider threats, and the other being the usage of standard authentication mechanisms for APIs. These issues exist under other security principles as well; however, some of them can be easily mitigated with modern IAM policies like passwordless, context-based, and attribute-based authentication. On the other hand, extensive use of automation, which in turn depends on APIs, can cause lenient authentication mechanisms, like API keys, to be used. These can be exploited by attackers, who can use such keys to interact with resources with higher privileges and far less scrutiny.
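To illustrate the last point, the sketch below contrasts a long-lived API key with one commonly used alternative: a short-lived, signed token limited to a single scope. This is an assumption-laden illustration rather than a recommendation from the article. The `SECRET` value, the token layout, and the function names are hypothetical, and a production system would normally rely on an established standard and a managed key store instead of hand-rolled tokens.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical signing key; in practice it would come from a secrets manager.
SECRET = b"demo-signing-key"


def issue_token(client_id, scope, ttl_seconds, now=None):
    """Create a signed token that carries one scope and an expiry time."""
    now = time.time() if now is None else now
    claims = {"sub": client_id, "scope": scope, "exp": now + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SECRET, body, hashlib.sha256).hexdigest().encode()
    return body + b"." + signature


def verify_token(token, required_scope, now=None):
    """Accept the token only if signature, expiry, and scope all check out."""
    now = time.time() if now is None else now
    try:
        body, signature = token.split(b".", 1)
    except ValueError:
        return False  # no separator: not a token issued by issue_token
    expected = hmac.new(SECRET, body, hashlib.sha256).hexdigest().encode()
    if not hmac.compare_digest(signature, expected):
        return False  # tampered with or signed by someone else
    claims = json.loads(base64.urlsafe_b64decode(body))
    return claims["exp"] > now and claims["scope"] == required_scope


token = issue_token("billing-job", "read:invoices", ttl_seconds=300, now=1000.0)
print(verify_token(token, "read:invoices", now=1100.0))   # True
print(verify_token(token, "read:invoices", now=1400.0))   # False, expired
print(verify_token(token, "write:invoices", now=1100.0))  # False, wrong scope
```

Unlike a static API key, a stolen token of this kind is useful only for a few minutes and only for the one scope it was issued for, which limits how far the trust can spill.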

ZTA is not a silver bullet that organizations can apply once and then consider the activity concluded.

This is an evolving journey that requires organizations to take definitive steps: understand the use case at hand and the methodologies best suited to it, and audit their systems to understand their effectiveness, their coverage, and the changes needed to streamline process flows in compliance with business requirements.

Organizations have a golden opportunity to finally bring the two sides of the coin together and work in harmony: security teams allow business teams to access all the resources they need, from the places they are designated to access them from, after irrefutably proving their identity, and without this trust spilling over to any other resources, essentially keeping infrastructures safe from the inherent harms of opening up.